Build AI Products by Capability, Not Category

A framework for choosing the right AI building blocks without the hype

I’m Shaili, an AI PM and educator who helps teams build responsible, high-impact AI products.

I now teach AI Product Management at the University of Washington.


Right now, it feels like every team is racing to add AI. "We’re building an agent!" "Let’s launch a copilot!" "Can we plug in ChatGPT here?"

But the best product managers know: just because you can add AI doesn’t mean you should.

AI isn't free. Not financially. Not environmentally. Not in user trust.

When we reach for the most powerful tools by default (large language models, multi-step autonomous agents), we often overbuild, overpromise, and overspend. Worst of all, we risk losing sight of the problem we’re actually trying to solve.

Let’s talk about a better way to build AI products: not by category but by capability.

Don’t Build for the Label. Build for the Need.

A lot of AI products start with category thinking.

“We need a chatbot.”

“This is a GenAI copilot.”

“We’re designing an agent.”

But categories are just labels. They don’t help you make better product decisions. They don’t guide implementation. They don’t reduce risk.

What does help is thinking in terms of capabilities: the core building blocks that power your product.

Instead of asking “Are we building a chatbot?”, ask:

What capabilities does this feature actually require to solve the user’s problem?

The Four Core AI Capabilities

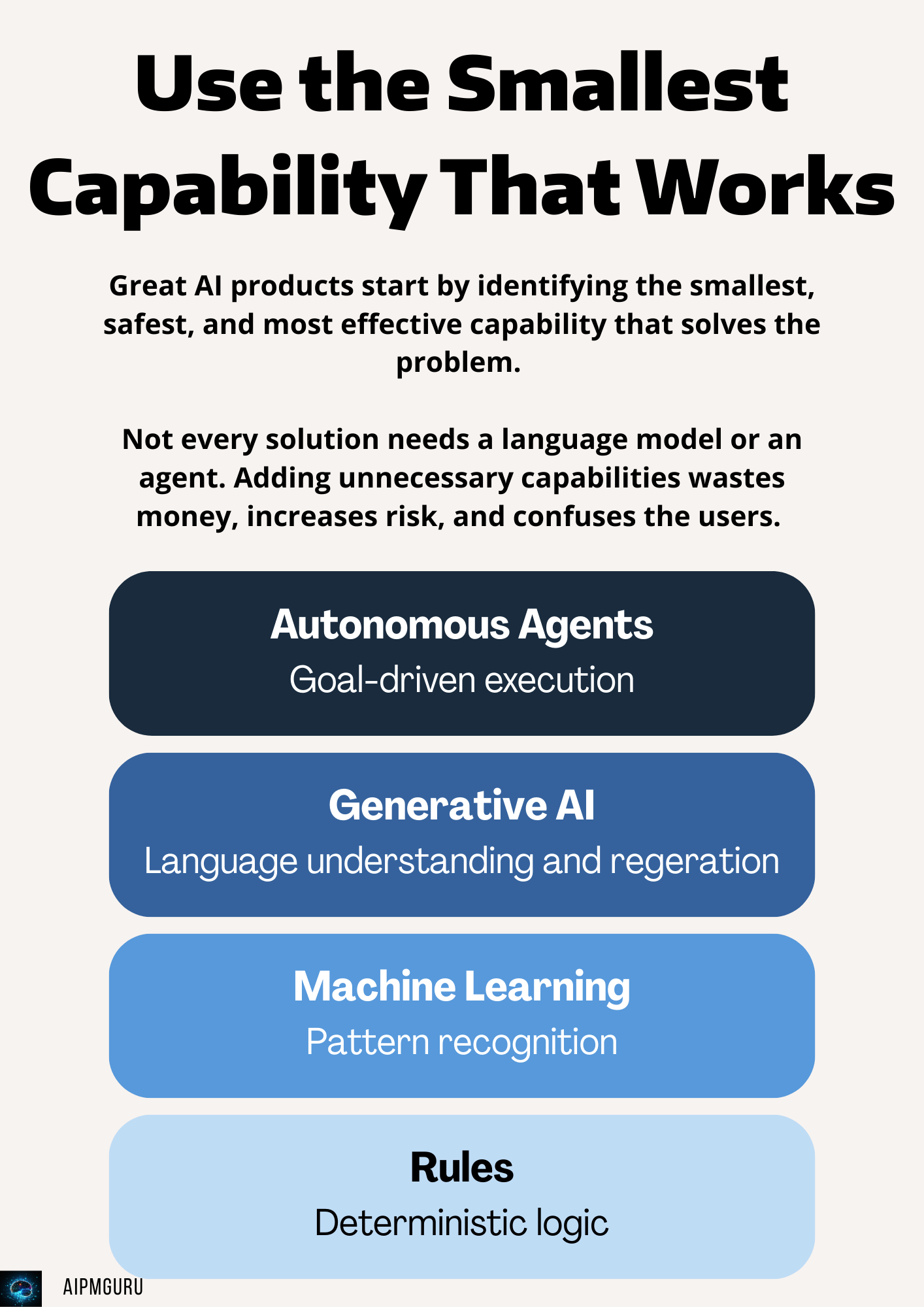

Most AI-enhanced products rely on a combination of just four foundational capabilities:

Rules: Deterministic logic. Great for enforcing compliance, setting boundaries, or creating workflows that need to be predictable and error-free.

Machine Learning: Pattern recognition. Useful when you have labeled data and want to classify, prioritize, or predict.

Generative AI: Natural language understanding and generation. Ideal for interacting with users in freeform language, where it can add clarity, speed, or usability.

Autonomous Agents: Goal-driven execution. Valuable when you need the system to plan and take actions without constant user intervention.

Everything else is a variation or combination of these four.

Not All Capabilities Carry the Same Risk

Rules are safe. When a rule fails, it’s usually a bug. You fix it and move on. But as you move up the capability stack, the risks compound.

If machine learning misclassifies something, it might annoy a user, but it’s usually recoverable.

If generative AI gets something wrong, users may end up confused or misinformed. That’s why it needs guardrails and fallback plans.

But if an autonomous agent takes the wrong action (books the wrong flight, sends the wrong message, or moves money without permission), trust is broken. That isn’t something a quick fix can recover. That’s reputational damage.

This is why starting at the top of the stack is so dangerous. Autonomy is the riskiest capability. It should come last, not first.
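One way to picture the guardrails and fallback plans that generative AI needs is to wrap the model call in deterministic rules, so the lowest layer of the stack protects the higher one. The sketch below assumes a hypothetical `generate` callable standing in for whatever model API you use; `passes_guardrails`, `answer_with_fallback`, the banned-phrase list, and the character limit are illustrative assumptions, not a recommended policy.

```python
from typing import Callable, Iterable

# Hypothetical fallback text; adjust the wording for your product.
FALLBACK_MESSAGE = "Sorry, I can't answer that reliably. Let me connect you with our support team."


def passes_guardrails(text: str, banned_terms: Iterable[str], max_chars: int = 500) -> bool:
    """Deterministic (rule-based) checks run on generated text before a user sees it."""
    if not text.strip():
        return False  # empty or whitespace-only output
    if len(text) > max_chars:
        return False  # suspiciously long output
    lowered = text.lower()
    return not any(term.lower() in lowered for term in banned_terms)


def answer_with_fallback(
    prompt: str,
    generate: Callable[[str], str],  # hypothetical stand-in for a real model call
    banned_terms: Iterable[str] = (),
    fallback: str = FALLBACK_MESSAGE,
) -> str:
    """Call a generative model; return a fixed response on errors or failed checks."""
    try:
        draft = generate(prompt)
    except Exception:
        # Timeouts, rate limits, and other model errors all take the same safe path.
        return fallback
    return draft if passes_guardrails(draft, banned_terms) else fallback


# Example: a stand-in model that wrongly promises a refund it has no authority to grant.
def fake_model(prompt: str) -> str:
    return "Good news, your refund was approved!"


print(answer_with_fallback("Where is my refund?", fake_model, banned_terms=["refund was approved"]))
```

The fallback here is itself a rule: when the model errors out or its output fails a check, the user gets a predictable response instead of a confident wrong one.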

Build from the Bottom Up

Great AI products don’t start by declaring, “We’re building an agent.”

They start by identifying the smallest, safest, and most effective capability that solves the problem.

First: Can this be solved with rules?

If not, does ML provide meaningful improvement?

If not, does GenAI make it more intuitive or scalable?

Only then, and only if there’s a real need, do you consider autonomy.

This layered approach is how you manage risk, earn trust, and build products that actually work.
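The bottom-up sequence can be sketched as a short loop. This is a minimal illustration, not a prescribed tool: the `Capability` enum and `choose_capability` function are hypothetical names, and each check stands in for whatever evaluation your team uses to decide whether a capability solves the problem well enough.

```python
from enum import IntEnum
from typing import Callable, Mapping, Optional


class Capability(IntEnum):
    """Hypothetical encoding of the four capabilities, lowest risk first."""
    RULES = 1
    ML = 2
    GENAI = 3
    AGENT = 4


def choose_capability(
    solves_problem: Mapping[Capability, Callable[[], bool]],
) -> Optional[Capability]:
    """Return the lowest-risk capability whose check passes, or None.

    Each check is a stand-in for your own evaluation (offline metrics,
    a prototype, user research). Capabilities without a check are skipped.
    """
    for capability in sorted(Capability):  # RULES -> ML -> GENAI -> AGENT
        check = solves_problem.get(capability)
        if check is not None and check():
            return capability  # stop here: no need to climb higher
    return None


# Example: rules can't cover the long tail, but a classifier meets the bar.
checks = {
    Capability.RULES: lambda: False,
    Capability.ML: lambda: True,
    Capability.GENAI: lambda: True,
}
choice = choose_capability(checks)
print(choice.name if choice else "nothing clears the bar")  # ML
```

The loop stops at the first capability that clears the bar, so a more powerful option is never even evaluated once a simpler one works. Leaving out a check for `Capability.AGENT` entirely is one way to encode “only if there’s a real need.”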

Why PMs Default to AI (Even When It’s the Wrong Tool)

It’s not hard to see why so many product teams overuse AI.

There’s pressure from above to “do something with AI.”

There’s FOMO: everyone else is launching copilots and agents.

And often, there’s no clear decision-making framework. So we chase trends instead of needs.

But the hidden costs are real.

Using GenAI when you don’t need it slows down your product. It increases your latency and GPU bills. It complicates your testing and QA. It burns more energy and compute. And it leaves users guessing what the system might do next.

The Principle: Use the Smallest Capability That Works

This is the idea I want PMs to carry forward:

Use the smallest capability that solves the problem.

Rules are reliable.

ML can accelerate decision-making.

GenAI can make things feel fluid and intuitive.

Agents can extend functionality, but only when the foundation is solid.

Every new capability adds complexity, cost, and risk. Make sure it also adds value.

A Better Set of Questions for AI PMs

Here’s what I ask myself and my teams when we build AI products:

Is AI even needed here, or will a simple rule do the job?

If we use ML, do we have the data, labels, and evaluation metrics to make it useful?

If we use GenAI, do we have a fallback plan when it goes wrong?

If we use autonomy, are users ready to trust it, and are we ready to monitor and course-correct?

How will each capability be tested, validated, and rolled out?

Are we choosing this because it’s the best solution or just because it’s the shiniest?
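These questions can also serve as a lightweight launch gate. The sketch below is one hypothetical way to record the answers: the `Readiness` fields and the `open_issues` function are illustrative names, not an established tool, and the questions that need human judgment (whether users are ready to trust autonomy, whether a choice is just the shiniest) are deliberately left out of the code.

```python
from dataclasses import dataclass


@dataclass
class Readiness:
    """Hypothetical record of answers to the review questions for one feature."""
    capability: str                # assumed values: "rules", "ml", "genai", or "agent"
    simple_rule_suffices: bool     # Would a simple rule do the job?
    has_data_and_labels: bool = False
    has_eval_metrics: bool = False
    has_fallback_plan: bool = False
    has_monitoring: bool = False   # ability to monitor and course-correct
    has_test_and_rollout_plan: bool = False


def open_issues(r: Readiness) -> list[str]:
    """Return the unanswered questions that should block launch."""
    issues: list[str] = []
    if r.capability != "rules" and r.simple_rule_suffices:
        issues.append("A simple rule would do the job; AI is not needed here.")
    if r.capability == "ml" and not (r.has_data_and_labels and r.has_eval_metrics):
        issues.append("ML needs data, labels, and evaluation metrics.")
    if r.capability == "genai" and not r.has_fallback_plan:
        issues.append("GenAI needs a fallback plan for when it goes wrong.")
    if r.capability == "agent" and not r.has_monitoring:
        issues.append("Autonomy needs monitoring and a way to course-correct.")
    if not r.has_test_and_rollout_plan:
        issues.append("No plan for testing, validation, and rollout.")
    return issues


# Example: a GenAI feature with a rollout plan but no fallback.
review = Readiness(capability="genai", simple_rule_suffices=False, has_test_and_rollout_plan=True)
for issue in open_issues(review):
    print("-", issue)
```

An empty list means every codified question has an answer; it does not replace the judgment calls the questions are meant to prompt.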

Responsible AI Product Management Is Layered, Not Loud

You don’t need to lead with the word “agent” to be an AI PM.

You need to lead with judgment.

A responsible AI PM doesn’t just make technical decisions; they manage up, across, and down. That means educating stakeholders who want to slap AI on everything, giving engineers the clarity to build what’s actually needed, and protecting users from complexity they didn’t ask for.

Sometimes your most valuable contribution as an AI PM is saying:

“We don’t need AI here, and here’s why.”

Your job isn’t to add AI for its own sake.

It’s to solve the right problems, with the right tools, at the right time.

The best AI products aren’t the most advanced.

They’re the ones that feel intuitive and trustworthy because every layer of capability was chosen with care.

Start small. Layer thoughtfully. Build trust as you go.

That’s how real AI product leaders work.