To Agent or Not to Agent? That's the AI Product Question

AI agents are powerful. But not every task needs one. Here’s how to know when to use them and when not to.

AI agents are everywhere right now.

They research.

They write emails.

They book flights, summarize meetings, query databases, and connect to internal tools.

The hype is real.

But here’s the catch:

Just because you can build an agent doesn’t mean you should.

In fact, most AI tasks don’t need one.

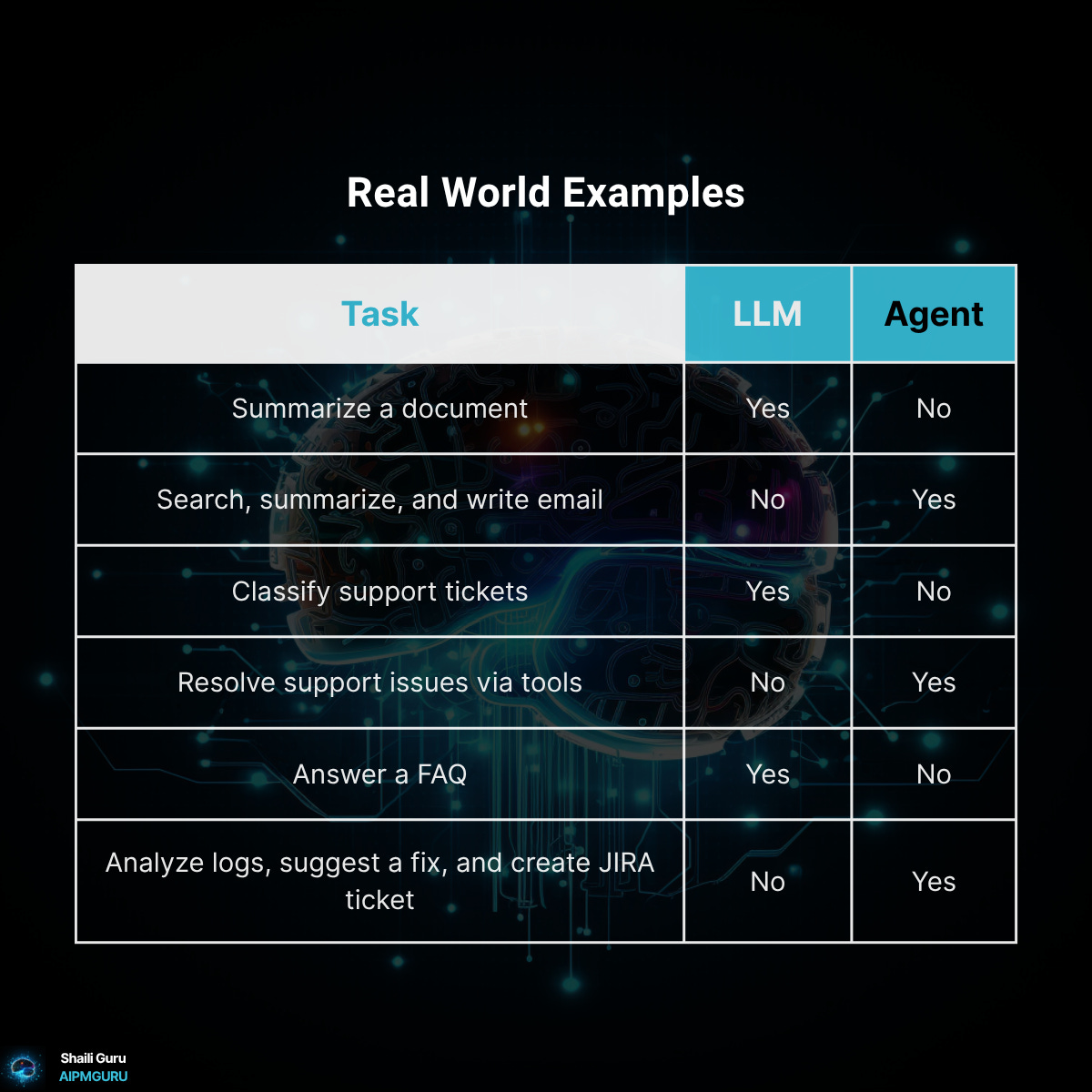

This post will help you determine when an AI agent is the right solution and when a simple LLM-powered feature is the smarter, faster choice.

What’s an AI Agent, Exactly?

If you’re new to the term, here’s the quick version:

An AI agent = LLM + tools + memory + planning

That means agents go beyond just generating text. They can:

Handle multi-step workflows

Make dynamic decisions

Execute actions using tools and APIs

But with that power comes complexity, risk, and overhead.
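Put in code, the formula above usually becomes a loop. The model picks the next action, the agent runs a tool, and the result goes into memory for the next decision. The Python below is a minimal sketch of that loop under stated assumptions, not any framework's API. `Agent`, `scripted_plan`, `summarize`, and `send_email` are hypothetical names. `scripted_plan` is a hard-coded stand-in for the LLM call that would normally choose each step.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical toy tools standing in for real integrations.
def summarize(text: str) -> str:
    # Toy "summary": truncate long text to 40 characters.
    return text[:40] + "..." if len(text) > 40 else text

def send_email(body: str) -> str:
    # Pretend to send an email and report what happened.
    return f"email sent ({len(body)} chars)"

TOOLS: dict[str, Callable[[str], str]] = {"summarize": summarize, "send_email": send_email}

# A planner maps (goal, memory) to the next (tool_name, tool_input), or None when done.
Planner = Callable[[str, list[str]], Optional[tuple[str, str]]]

def scripted_plan(goal: str, memory: list[str]) -> Optional[tuple[str, str]]:
    # Hypothetical stand-in for an LLM: a real agent would prompt a model here.
    if not memory:
        return ("summarize", goal)
    if len(memory) == 1:
        summary = memory[0].split(": ", 1)[1]
        return ("send_email", summary)
    return None  # goal reached

@dataclass
class Agent:
    plan: Planner                                    # planning (the LLM's job)
    tools: dict[str, Callable[[str], str]]           # tools
    memory: list[str] = field(default_factory=list)  # memory of past steps
    max_steps: int = 5                               # cap to stop runaway loops

    def run(self, goal: str) -> list[str]:
        for _ in range(self.max_steps):
            action = self.plan(goal, self.memory)
            if action is None:
                break
            name, arg = action
            result = self.tools[name](arg)
            self.memory.append(f"{name}: {result}")
        return self.memory

agent = Agent(plan=scripted_plan, tools=TOOLS)
for entry in agent.run("Summarize this doc and email the summary to the team"):
    print(entry)
# summarize: Summarize this doc and email the summary...
# send_email: email sent (43 chars)
```

Even this toy version shows where an agent's overhead comes from. Each step is another model decision, and the `max_steps` cap is there because a planner can keep choosing actions indefinitely.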

So the real question becomes:

When Do You Actually Need an Agent?

Here’s a simple rule of thumb:

Use an Agent When...

The task involves multiple steps

For example: “Summarize this doc → Send an email → Create a task in Notion”

You need to interact with external systems

This includes querying a vector database, calling internal APIs, or updating a calendar

There’s a goal to achieve, not just a question to answer

For example: “Find the best vendor for X.” This requires exploration and decision-making.

User input or context changes the flow

Think: onboarding experiences, support assistants, or lead qualification workflows

Don’t Use an Agent When...

You only need summarization, classification, or generation

A single LLM call handles these well; no agent needed

The task is deterministic and stable

If the logic is always the same, agents introduce unnecessary complexity

You care more about speed and reliability than flexibility

Every extra model call and tool round-trip adds latency, and multi-step runs are harder to debug and monitor

There’s no tool use or branching logic

If the LLM can do it in one shot, don’t add overhead
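By contrast, a feature that fits this list needs no loop at all. The sketch below classifies a support ticket with one prompt and one response. `call_llm` is a hypothetical callable that wraps whatever model client you use, and the labels are invented for illustration.

```python
from typing import Callable

# Illustrative label set; swap in your own categories.
LABELS = ("billing", "bug", "feature_request")

def classify_ticket(text: str, call_llm: Callable[[str], str]) -> str:
    # One prompt in, one answer out: no tools, no memory, no planning loop.
    prompt = (
        f"Classify this support ticket as one of: {', '.join(LABELS)}. "
        f"Reply with the label only.\n\nTicket: {text}"
    )
    label = call_llm(prompt).strip().lower()
    # A fixed label set keeps the output easy to validate, test, and monitor.
    return label if label in LABELS else "unknown"

# Usage with a fake model so the example runs offline.
print(classify_ticket("The app crashes when I log in", lambda prompt: " Bug\n"))  # bug
```

Because the path is fixed, you can unit-test it with a fake model exactly like this, which gets harder once the model is choosing the steps.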

A Simple 3-Question Framework

Before you spin up an agent, ask yourself:

Is this task multi-step or branching?

Does it require access to tools or memory?

Will the flow vary based on user input or context?

If you answer yes to two or more, you’re probably in agent territory.

If not, stick to a simpler LLM-based solution.
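If you want to make the framework explicit in a design review, it fits in a few lines. This Python sketch uses hypothetical names (`TaskProfile`, `recommend`); the threshold of two "yes" answers is the rule of thumb above, not a hard cutoff.

```python
from dataclasses import dataclass

@dataclass
class TaskProfile:
    multi_step_or_branching: bool  # Question 1
    needs_tools_or_memory: bool    # Question 2
    flow_varies_with_input: bool   # Question 3

def recommend(task: TaskProfile) -> str:
    # Count the "yes" answers; True counts as 1 and False as 0.
    yes_answers = sum([
        task.multi_step_or_branching,
        task.needs_tools_or_memory,
        task.flow_varies_with_input,
    ])
    return "agent" if yes_answers >= 2 else "simple LLM feature"

# Summarizing a single document: no to all three.
print(recommend(TaskProfile(False, False, False)))  # simple LLM feature
# "Find the best vendor for X": multi-step, tool-driven, and context-dependent.
print(recommend(TaskProfile(True, True, True)))     # agent
```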

TL;DR

Use agents only when the task complexity demands it.

If you’re building a one-shot generation feature, keep it simple.

However, an agent might be the right choice if the task requires external actions, memory, or planning.

Knowing when to use an agent is one of the most crucial product calls you’ll make in AI-native product development.