Start With Why: Purpose-Driven AI Product Leadership in 2025

The full AI Index Report is over 400 pages, packed with charts, data, and analysis. So I thought: why not let AI help me break it down from a product manager’s perspective?

AI is everywhere in 2025. After a brief slowdown, global investment in artificial intelligence has come roaring back, making AI a central driver of business value (hai_ai_index_report_2025.pdf). In 2024 alone, global corporate investment in AI reached a record $252 billion, a 26% jump from the previous year (hai_ai_index_report_2025.pdf). More than three-quarters of organizations now use AI in some form, up from just over half a year earlier (hai_ai_index_report_2025.pdf). Generative AI is booming: private investment in the sector reached $33.9 billion in 2024, 18.7% more than in 2023 (hai_ai_index_report_2025.pdf). These numbers are staggering. But amid this AI gold rush, a fundamental question looms: Why? Why are we building these AI products, and to what end?

Simon Sinek famously reminds us that “people don’t buy what you do; they buy why you do it.” This wisdom resonates now more than ever. In AI product management, starting with Why means anchoring every project in a clear purpose: the core problem we aim to solve or the meaningful benefit we seek to deliver. Adopting AI simply because everyone else is, or because the technology is exciting, is not enough. Without a guiding purpose, even the most powerful AI can become a solution in search of a problem. Great AI product leaders begin with clarity of purpose (the Why), then define How their teams will achieve it, and only then decide What to build. In this post, we’ll explore the latest insights from the 2025 AI Index Report through the lens of Sinek’s philosophy, showing how purpose-driven leadership can turn the era’s AI frenzy into lasting impact. We’ll look at the explosive growth in AI investment, the frontiers of model innovation, AI deployment maturity, rising regulation and ethics concerns, and the evolving developer toolchains, and discuss how each trend underscores the need to lead with a clear Why.

Riding the AI Investment Wave with Purpose

Business is “all in” on AI. The past year saw record-breaking investment and adoption of artificial intelligence in industry. U.S. private AI investment surged to $109.1 billion in 2024 (nearly 12× China’s level) as companies infused AI into products and operations (hai_ai_index_report_2025.pdf). Globally, corporate AI investment swelled to an unprecedented $252.3 billion (hai_ai_index_report_2025.pdf), part of a 13-fold increase over the past decade (hai_ai_index_report_2025.pdf). And it’s not just money being invested; organizations are committing trust and strategy as well. Fully 78% of organizations reported using AI last year, up from 55% in 2023 (hai_ai_index_report_2025.pdf). In other words, most businesses have jumped on the AI bandwagon.

This wave is fueled by justified optimism: a growing body of research confirms that AI can boost productivity and even help narrow skill gaps across the workforce (hai_ai_index_report_2025.pdf). AI is increasingly embedded in daily life and work, from autonomous taxis, with Waymo alone providing more than 150,000 rides each week in the U.S. (hai_ai_index_report_2025.pdf), to AI copilots that speed up coding and content creation. So the hype has footing in reality: AI can deliver real value. The risk, however, is that in the rush not to miss out, teams implement AI features without a guiding vision. When “everyone’s doing it,” it’s easy to lose sight of the purpose behind the product. As product managers (PMs), we must ensure this investment wave is channeled toward solving meaningful problems, not just chasing trends.

Start with Why to ride this wave effectively. Imagine two competing apps adding an AI chatbot feature. One team integrates a chatbot simply because generative AI is hot; the other does it to reduce customer wait times and improve support quality (their clear Why). It’s no surprise which chatbot ends up delighting users. The 2025 AI Index data shows massive momentum: 71% of organizations reported using generative AI in at least one business function last year, up from 33% the year prior (hai_ai_index_report_2025.pdf). But simply deploying AI isn’t a silver bullet. Many companies are still struggling to translate AI adoption into significant impact. Most report only modest benefits so far: for example, almost half of organizations using AI in service operations reported cost savings, but typically under 10%, and the most common revenue boost from AI in sales and marketing was under 5% (hai_ai_index_report_2025.pdf). The lesson is clear: adopting AI without purpose yields incremental gains at best. To unlock transformative outcomes, AI product leaders must prioritize initiatives that serve a compelling purpose for customers and the business. When you have a crystal-clear Why, you can focus on the right applications of AI: the ones that drive significant value and justify those big investments.

Frontier AI Models: Innovation Fueled by Intent

The AI Index makes one thing plain: frontier AI development is accelerating at breakneck speed. Industry has taken the lead in pushing the boundaries: nearly 90% of notable AI models in 2024 came from industry, up from 60% in 2023 (hai_ai_index_report_2025.pdf). Tech companies are pouring resources into “frontier models,” the most advanced large language models, vision systems, and multimodal AI. Training compute for cutting-edge models doubles roughly every five months, and dataset sizes double roughly every eight months (hai_ai_index_report_2025.pdf). The result? AI systems now achieve feats that seemed like science fiction a couple of years ago. Whether it’s models acing tough new benchmarks or generating high-quality video, the capability frontier keeps moving forward (hai_ai_index_report_2025.pdf).
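To put those doubling times in perspective, here is a quick back-of-the-envelope conversion into yearly growth factors. It is a sketch that assumes smooth exponential growth at exactly the stated doubling times (real trend lines only approximate this), and the function name `annual_growth_factor` is illustrative, not something from the report.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Return the 12-month growth factor for a quantity that doubles every
    `doubling_months` months, assuming steady exponential growth."""
    if doubling_months <= 0:
        raise ValueError("doubling time must be positive")
    return 2 ** (12 / doubling_months)


# Approximate doubling times cited in the AI Index.
for label, months in [("training compute", 5), ("dataset size", 8)]:
    print(f"{label}: ~{annual_growth_factor(months):.1f}x per year")
# training compute: ~5.3x per year
# dataset size: ~2.8x per year
```

A five-month doubling time compounds to roughly a fivefold increase in training compute every year, which is why yesterday’s frontier quickly becomes today’s baseline.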

Yet one striking finding is that the frontier is becoming increasingly crowded and competitive. The performance gap between the best models and the “second tier” is shrinking quickly. On one chatbot benchmark, the gap between the top and 10th-ranked models narrowed from 11.9% to just 5.4% within a year (hai_ai_index_report_2025.pdf), and the top two models are now separated by less than 1% (hai_ai_index_report_2025.pdf). High-quality AI is no longer the secret sauce of a couple of giants; it’s available from a growing number of developers (hai_ai_index_report_2025.pdf). Open-source and “open-weight” models are catching up rapidly. In early 2024, the leading closed-weight model outperformed the best open-weight model by 8.0%; by February 2025, that gap had narrowed to just 1.7% (hai_ai_index_report_2025.pdf). In other words, the playing field for raw technology is leveling out.

This shift carries a crucial insight for AI product managers: Technology alone won’t set you apart for long; your product’s purpose and execution will. When anyone can fine-tune a powerful model or access top-tier AI through an API, the differentiator becomes how and why you use it. If both competitors can access a state-of-the-art AI model, the one with a clear vision of the customer problem and a thoughtful design will win. This is why Sinek’s mantra matters even more at the frontier of innovation. AI PMs should indeed stay on top of model breakthroughs – whether it’s GPT-4, Claude, Med-PaLM, or the next big thing – but don’t fall into the trap of “innovation for innovation’s sake.” Instead, ask: How does this new capability enable us to fulfill our mission better? For example, in 2024 we saw a wave of large-scale medical AI models released – from general-purpose Med-Gemini to specialized EchoCLIP for heart ultrasounds (hai_ai_index_report_2025.pdf). The successful ones will be those built with a clear intent – improving diagnostic accuracy or speeding up patient care. A frontier model with purpose behind it becomes a game-changer; without purpose, it’s just another demo.

In practical terms, leading with Why in frontier innovation means prioritizing user impact over model hype. It means guiding your team to experiment boldly with new AI tech while always connecting it to a real-world outcome. Yes, AI systems now solve about 72% of the coding problems on the SWE-bench benchmark, up from about 4% a year earlier (hai_ai_index_report_2025.pdf), but which problems should we solve? As PMs, we translate these advances into product decisions. With so many powerful models at our fingertips, our role is to choose the innovations that align with our product vision. Remember: customers won’t stay impressed for long just because your app uses GPT-4 or a fancy medical model; those capabilities are becoming commonplace. What they will remember is that your app solved an aching pain point or delivered a personalized experience. That only happens if we start every roadmap discussion by affirming the Why. The frontier of AI is ultimately a means to an end; it’s our job to define that end and rally our teams around it.

From Adoption to Impact: Making AI Count

Having AI is not the same as making AI count. We’ve established that more companies than ever are adopting AI, but adoption alone doesn’t guarantee success. The 2025 data shows a clear pattern: Most organizations are still in the early stages of their AI journey, figuring out how to turn pilots into profit and experiments into everyday tools. While 78% of firms used AI last year (hai_ai_index_report_2025.pdf), far fewer are seeing large-scale business transformations from it. Many projects remain stuck at the proof-of-concept or limited deployment stage.

Why is that? Often, it’s because teams started with what the technology could do instead of why the customer or business needed it. They treated AI as a plug-and-play solution rather than a piece of a larger strategy. As Sinek might say, they lost sight of the why. The AI Index report reveals that even when companies do implement AI, the measurable impacts tend to be modest so far. One survey found that across different business functions, the most common scale of AI-driven improvement was small – e.g. cost savings under 10%, or revenue upticks under 5% in areas like marketing and supply chain (hai_ai_index_report_2025.pdf). In other words, plenty of firms are dabbling in AI, but few have cracked the code to major efficiency or revenue gains yet.

AI product leaders must reconnect with purpose and end-user value to cross the chasm from adoption to real impact. It’s not about AI adoption; it’s about AI integration. Successful integration means the AI solution is tightly woven into the fabric of the product or workflow to serve a clear goal. For example, consider an e-commerce company implementing an AI recommendation engine. If the goal (Why) is to genuinely help customers discover products they love (and thus improve satisfaction and sales), the PM will measure success in those terms and iterate the AI accordingly. They might find that a simpler model with a feedback loop delivers better results for their why than a black-box model that’s nominally more “accurate” but misaligned with what customers actually want. On the other hand, a company that deploys a recommendation AI just because “our competitors have one” might tick the adoption box but see no lift in customer lifetime value, and wonder why the expensive AI isn’t paying off.

Real-world examples of purpose-driven deployment are beginning to emerge. In healthcare, for instance, some hospitals have introduced AI systems to prioritize ER triage or flag high-risk patients. The ones seeing success approached it with a mission mindset: We want to save lives by speeding up critical care. They measure what matters (e.g., reduction in wait time for stroke patients), and they align doctors, nurses, and IT around using AI to achieve that goal. In contrast, other hospitals that installed fancy diagnosis algorithms without workflow integration found that doctors ignored the AI’s outputs. The difference? One started with Why, the other started with What. As an AI PM, you should constantly translate AI capabilities into the language of outcomes and impacts. Ask “So what?” for every AI feature – if it doesn’t tangibly improve the user experience or address a strategic objective, reconsider if it’s worth doing at all.

The data also suggests a mindset shift from technology-centered to human-centered metrics. Adoption is a tech-centric metric (“we use AI now”), whereas impact is human-centric (“our users/customers are better off now”). As AI leaders, we need to champion that shift in our teams and executive discussions. Celebrate the wins where AI genuinely moves the needle and be honest about the projects where it hasn’t (yet). When you keep the Why front and center, you create a culture where it’s okay to pivot or kill an AI project that isn’t delivering value – because everyone understands we’re here to solve real problems, not just to play with cool tech. In 2025 and beyond, the organizations that convert AI adoption into breakthrough impact will be those whose product leaders insist on purpose at every stage, from initial exploration to scaled execution.

Responsible AI: Aligning Innovation with Values

Amid the exponential growth of AI capabilities, questions of ethics, trust, and responsibility have never been more urgent. Just as AI is transforming what we build, it’s challenging us to think deeply about how we build it and why. Governments around the world are no longer sitting on the sidelines; they’re stepping in with new rules and frameworks to ensure that AI is developed and deployed responsibly. In 2024, U.S. federal agencies introduced 59 AI-related regulations, more than double the number in 2023 (hai_ai_index_report_2025.pdf). And across 75 countries, legislative mentions of “AI” rose more than 21% year over year, part of a ninefold increase since 2016 (hai_ai_index_report_2025.pdf). Europe made history by passing the EU AI Act, the first comprehensive AI law in a major economy, which takes a risk-based approach to governing AI and holds providers of high-risk AI systems to strict obligations (hai_ai_index_report_2025.pdf). In other words, the era of relatively unchecked AI development is ending, and an age of accountability is dawning.

Why such urgency from policymakers? Because trust remains a major challenge with AI systems today. Issues like data privacy breaches, algorithmic biases, and the spread of AI-generated misinformation have shaken public confidence. Fewer people believe AI companies will safeguard their data, and concerns about fairness and bias persist (hai_ai_index_report_2025.pdf). In 2024, we witnessed AI deepfakes and misinformation swirling around elections globally (hai_ai_index_report_2025.pdf), raising hard questions about AI’s impact on society. Even at the cutting edge of AI research, we see that large models touted as “unbiased” still exhibit troubling biases – for example, GPT-4 and Claude 3 (trained with techniques to reduce bias) nonetheless showed implicit biases, associating negative terms more with Black individuals and stereotyping genders in career roles (hai_ai_index_report_2025.pdf). These findings underscore that ethical challenges in AI are real and persistent. If we ignore them, we risk building products that amplify inequities or erode user trust.

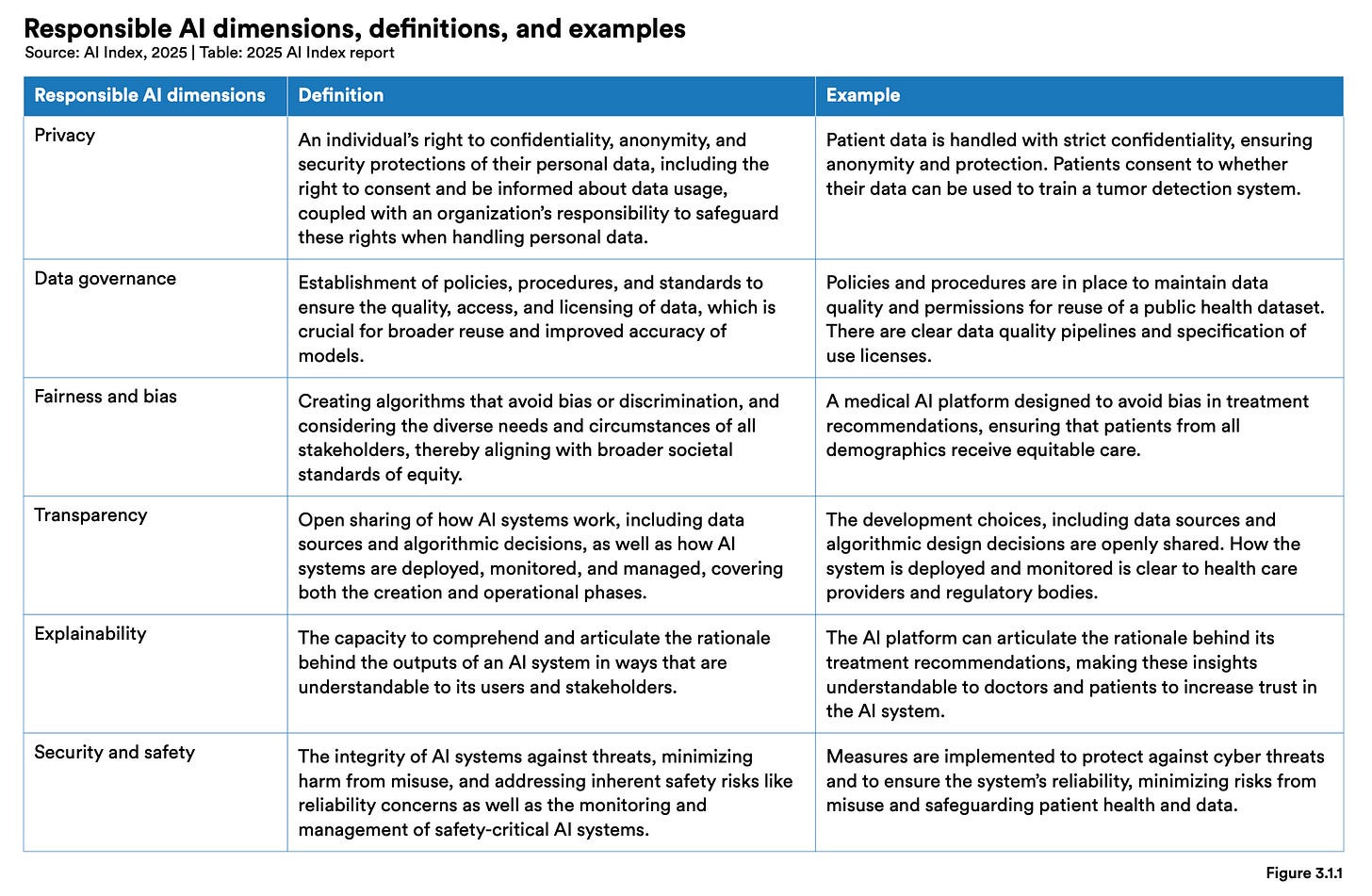

For AI product leaders, aligning our work with strong ethical principles isn’t just a nice-to-have – it’s foundational to our Why. If your product’s purpose is, say, to help people manage their finances wisely, that inherently comes with a duty to do so fairly, transparently, and securely. Responsible AI should be woven into the very purpose of the product. That means from day one of development, we consider questions like: Are we using data in a way that respects user privacy? Could our model’s predictions be unfairly biased against a group of users? How will we handle mistakes or misuse of our AI? Embedding these considerations requires intentional leadership. It means bringing diverse voices into AI design (including domain experts, ethicists, and the communities affected by our technology). It also means staying up to date on evolving best practices and regulations. The 2025 Index reports that global cooperation on AI governance intensified in 2024, with organizations like the OECD, United Nations, and African Union all publishing frameworks for responsible AI, focusing on principles like transparency, explainability, and trustworthiness (hai_ai_index_report_2025.pdf). There is a growing global consensus on what responsible AI looks like, and PMs should proactively align with it rather than waiting to be forced by law.

Leading with Why here translates to articulating a clear ethical purpose for your AI product. For example, a PM might define the product’s mission as “help users improve their health with AI in a way that is private, safe, and equitable.” That statement guides not only what features you build but how you build them. When tough trade-offs arise (e.g., faster time-to-market vs. a more thorough bias audit), your purpose and values should drive the decision. This is where aspiration meets accountability. We aspire to use AI to benefit people, and we hold ourselves accountable for doing it the right way. Increasingly, regulators will hold us accountable too – but the truly purpose-driven teams won’t need the law to tell them. They’ll exceed the standards because their reputation and Why depend on it. And make no mistake, ethical leadership is also good business: users are more likely to embrace AI products they trust. A recent global survey showed a rise in optimism about AI’s benefits alongside these efforts to govern AI responsibly (hai_ai_index_report_2025.pdf). People want to believe in AI’s promise – it’s on us to justify that belief by delivering AI solutions that are as principled as they are powerful.

Empowering AI Teams with the Right Tools (and the Right Why)

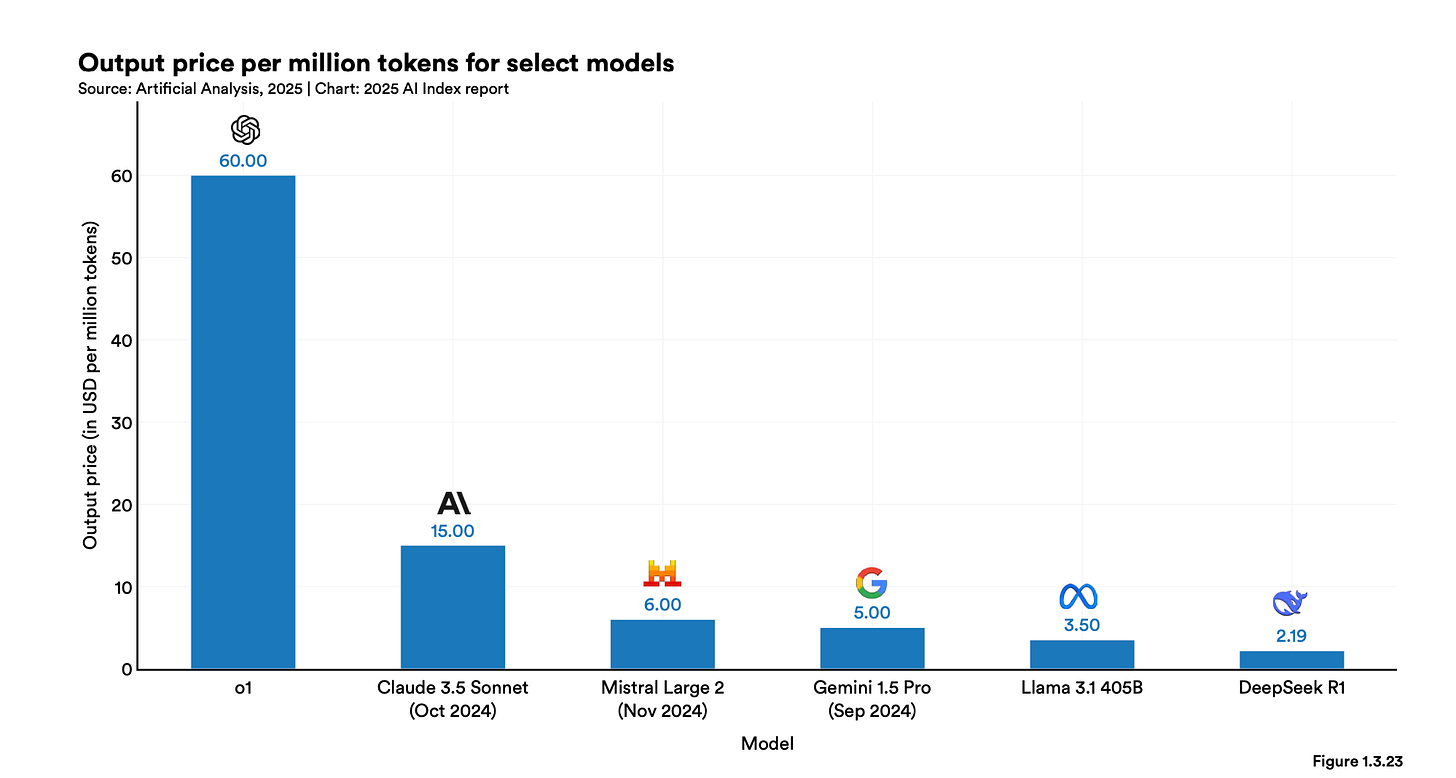

Building AI products in 2025 is a team sport played with incredibly powerful tools. One of the most exciting trends from the AI Index is how the developer toolchain for AI has matured. Thanks to the open-source movement and rapid advances in hardware, AI is more accessible, affordable, and scalable than ever. Consider this: the inference cost of a system performing at the level of GPT-3.5 (the model behind the original ChatGPT) dropped more than 280-fold between November 2022 and October 2024 (hai_ai_index_report_2025.pdf). In parallel, the energy efficiency of AI hardware improved by about 40% per year (hai_ai_index_report_2025.pdf), and cheaper, specialized AI chips are becoming the norm. This means that what was cutting-edge (and costly) AI two years ago can now be deployed by a small startup or a lean corporate team with relative ease. Moreover, open-source AI models are booming, with communities releasing new models and updates at a dizzying pace. By early 2025, open models had practically caught up with the performance of the best closed models (hai_ai_index_report_2025.pdf), erasing most of the advantage once held by a few tech giants. Tools like TensorFlow and PyTorch, the Hugging Face model hub, and an ecosystem of MLOps platforms have put world-class AI capabilities at developers’ fingertips.
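The two cost figures compound very differently. As a rough sketch, assuming the 280-fold drop happened smoothly over exactly two years (the report’s window runs from late 2022 to late 2024) and that the 40% efficiency gain held steady each year, the implied rates work out as follows. The helper names `per_year_factor` and `compounded` are illustrative.

```python
def per_year_factor(total_factor: float, years: float) -> float:
    """Constant yearly factor that compounds to `total_factor` over `years` years."""
    return total_factor ** (1 / years)


def compounded(annual_gain: float, years: int) -> float:
    """Total multiplier after `years` of a fixed fractional gain (0.40 means +40%/year)."""
    return (1 + annual_gain) ** years


# Assumption: treat "late 2022 to late 2024" as exactly two years.
cost_drop_per_year = per_year_factor(280, 2)  # roughly 16.7x cheaper each year
efficiency_gain = compounded(0.40, 2)         # roughly 1.96x more energy-efficient overall

print(f"Implied inference-cost drop: ~{cost_drop_per_year:.1f}x per year")
print(f"Hardware energy efficiency after two years: ~{efficiency_gain:.2f}x")
```

Hardware efficiency alone accounts for only about a 2x improvement over that window, which suggests that most of the 280-fold cost decline came from elsewhere, such as smaller, more capable models.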

For product managers, this is both a blessing and a responsibility. On one hand, our toolset to execute on ambitious AI visions has never been stronger. You can fine-tune a state-of-the-art language model on your proprietary data with just a few lines of code or plug an off-the-shelf image recognition API into your app to deliver instant magic to users. Prototyping AI features that once took months of research can now sometimes be done in a hackathon. This democratization of AI tech means innovation is limited less by technical feasibility and more by our imagination and clarity of purpose. On the other hand, with great power comes great responsibility (to borrow a phrase). Just because we can add an AI feature quickly doesn’t mean we should. It falls to the PM to be the guardian of the product’s purpose – to prevent “feature-itis” and maintain focus. With so many shiny tools available, teams might be tempted to add AI everywhere. But a purpose-driven PM will ask, “Does this new tool or model help us achieve our mission better?” If yes, awesome – the tools are ready. If not, maybe it’s a distraction.

Empowering your team with a clear Why is as important as equipping them with the right tools. Great AI PMs don’t just hand their engineers a powerful model and say, “Go crazy.” They frame the problem and context so the team knows what success looks like. For example, instead of “Let’s use this new NLP API in our app,” a PM might say, “Our goal is to reduce onboarding friction for new users – maybe we can use this NLP model to create a smarter tutorial. Let’s experiment.” In the latter case, the team has a guiding star (reduce friction) while wielding the tool, which makes their creative work far more directed and likely to succeed. It’s the classic Why -> How -> What in action: Why (reduce friction for users), How (perhaps by using NLP to personalize the tutorial flow), What (implement the chosen solution with the API). When teams internalize the Why, they become more autonomous and innovative because they understand the endgame. A developer or data scientist who knows why a feature matters will make dozens of micro-decisions in implementation that align with that purpose, often coming up with better solutions than anyone could have planned top-down.

Team-building in the AI era also means assembling a diverse crew that believes in the mission. An AI product team might include software engineers, data scientists, UX designers, data ethicists, and domain experts. As a PM, you should hire and develop people with that shared purpose in mind. Skills can be learned, but genuine passion for the Why of your product is invaluable. A group of true believers will move mountains with the tools at hand. We see this in many open-source AI communities: people around the world collaborate on a project not because they’re paid but because they’re inspired by the goal (think of those building AI for climate science or for assisting disabled users). That same spirit can infuse any AI team. Encourage your team to engage with the big picture: for instance, have your data scientists talk to end-users or hear directly from stakeholders about why the product matters. This fuels their motivation and often sparks insights that pure technical work might miss.

Lastly, as AI PMs, we should also invest in our own growth with purpose in mind. The career of an AI product manager in 2025 is ripe with opportunity – nearly every industry needs savvy AI leadership, as evidenced by the explosion of AI investment and initiatives worldwide. (When an entire country like Saudi Arabia launches a $100 billion AI initiative (hai_ai_index_report_2025.pdf), you know the field is flush with resources and attention.) But not every role will resonate with your personal Why. One PM might be driven by improving education, another by advancing healthcare, another by building the coolest consumer gadgets. There’s no wrong answer, except to chase an AI role just because it’s lucrative or shiny. The most fulfilled (and effective) AI product leaders I know are intentional about aligning their career moves with their values and interests. As Sinek would put it, success comes when we are in clear pursuit of why we want it. So, even as you skill up on the latest AI technologies and frameworks, take the time to clarify your own purpose as a leader. That clarity will guide you to the right opportunities and help you inspire teams wherever you go.

Implications for AI Product Leaders: Leading with Why

Bringing it all together, what should AI product managers and aspiring PMs do to lead with purpose in this dynamic landscape? Here are some key takeaways and actions grounded in the trends we’ve discussed:

Purpose-Driven Prioritization: Focus your roadmap on AI initiatives that align with a clear value proposition. Don’t implement AI for novelty’s sake. With so many options (from generative AI to predictive analytics), use your product’s Why as the filter. Remember that broad AI adoption alone doesn’t guarantee big outcomes – many companies see only single-digit improvements (hai_ai_index_report_2025.pdf). Prioritize projects where AI truly moves the needle for your users or business mission.

Build Mission-Driven Teams: When hiring or forming AI teams, look for belief in the product vision, not just technical chops. A team that rallies around a shared purpose will be more creative, resilient, and accountable. Ensure everyone, from engineers to designers, understands why the product matters. This shared context will help your team make consistent decisions and come up with user-centric innovations even when you’re not in the room.

Innovation with Intent: Embrace cutting-edge AI developments but always tie them back to user needs. If a new frontier model or tool catches your eye, ask how it could solve a pain point or unlock a new benefit for your customers. The AI Index shows that top-tier AI capabilities are becoming widely accessible (hai_ai_index_report_2025.pdf) – your competitive advantage will come from how you apply them. Encourage experimentation, but set success criteria rooted in your product’s why (e.g. better customer support, faster delivery, more personalized learning). Kill off experiments that don’t serve a clear purpose and double down on the ones that do.

Responsible AI as a Default: Make ethics and responsibility core to your product strategy, not an afterthought. Proactively implement responsible AI practices (fairness audits, bias mitigation, transparency features) as part of feature development. Treat regulations like the EU AI Act not as compliance hurdles but as minimum standards – aim higher. You build trust and a stronger brand by designing with privacy, fairness, and transparency from the start. For example, if your AI is making life-impacting decisions, ensure there’s a human in the loop or an explanation for users. Champion ethical considerations in roadmap discussions just as strongly as you do user growth or revenue goals – this is part of leading with purpose and holding yourself accountable to it.

Career Development with Purpose: In plotting your career in AI product management, identify the domains or problems you’re passionate about. The AI field is booming with opportunities (global AI investment has grown thirteenfold in a decade (hai_ai_index_report_2025.pdf)), so it’s an employee’s market for those with the right expertise. But long-term success will come from aligning your career moves with a personal Why. Whether you care about climate, healthcare, finance, or entertainment, seek roles where you can marry your AI skills with that passion. This will not only make your work more fulfilling, but your genuine conviction will inspire colleagues and lead to better products. Continuously learning is part of this journey – invest in understanding both the latest tech trends and the societal context (policy, ethics, user sentiments) so you can be the well-rounded leader who can connect the dots.

The 2025 AI Index Report leaves no doubt that we are in a remarkable time for technology. AI capabilities are advancing rapidly, investment is pouring in, and AI is touching more and more aspects of daily life. As AI product managers, we have the privilege and responsibility of translating these developments into products that truly shape the world. This is a moment for aspiration tempered by accountability. We should aspire to leverage AI in ways that were never before possible – to tackle big challenges, to delight users with personalized experiences, and to make businesses more efficient and creative. But we must also hold ourselves accountable to doing so with integrity, purpose, and clarity.

Ultimately, people don’t adopt AI products because of how clever the algorithm is; they adopt them because of the value and meaning those products bring. When we lead with a clear Why, we ensure that value and meaning are front and center. Our teams stay focused on what really matters. Our stakeholders understand the vision. Our users feel the difference – they sense when a product was built to help them versus when it was just built to show off technology. In 2025 and beyond, leading with purpose will be the North Star for great product management. It’s what will distinguish the AI products that enrich lives from those that fade away in the hype cycle.

So here is my call to action for AI PMs everywhere: ground yourself in your Why, and let it guide every decision. Start your next planning meeting by reiterating the mission. Challenge yourself to articulate the purpose behind each feature on your roadmap. Mentor your team not just in the what of product management but the why of leadership. And don’t be afraid to speak up for ethical, user-centric choices – that’s part of owning the accountability piece of this puzzle.

As we forge ahead into uncharted AI frontiers, those who lead with clarity of purpose will create the products that endure. Let’s commit to being those leaders. By balancing ambition with empathy and vision with values, we can ensure that the AI revolution ultimately serves humanity in the best way possible. The future of AI will be written by product leaders who start with Why – let that be you. Now go forth and build the future on purpose.

🔗 Explore the full AI Index Report for 2025 here: Stanford HAI AI Index

This blog draws on insights from the AI Index Report 2025, one of the most comprehensive sources on the state of AI. For a deeper dive into benchmarks, adoption trends, and responsible AI developments, explore the full report.

Since this blog is all about AI—and built on insights from the AI Index Report—it only felt right to use AI to help write it. This article was generated with ChatGPT using prompts I created and refined. I reviewed, edited, and shaped the final message to align with my voice and intent.