CRISP-ML for Machine Learning Products

Managing products that learn, adapt, and occasionally break

This is the third post in my 8-part series on AI product management frameworks. In my first post, I explored the evolution of AI products and the unique challenges they present. My second post examined the classic CRISP-DM framework from a product perspective. Today, I'll focus on CRISP-ML, a framework designed specifically for machine learning products that addresses challenges such as model decay and the need for continuous monitoring.

Why Traditional Product Management Falls Short for ML

Machine learning products differ fundamentally from conventional software products in ways that create unique challenges for product managers:

Performance decay: Unlike traditional software that behaves consistently until code changes, ML models can degrade over time as data patterns change

Data dependencies: ML product performance is directly tied to data quality and availability, creating new dependencies outside the development team's control

Probabilistic outcomes: ML products produce probabilistic rather than deterministic results, making quality assurance more complex

Explainability challenges: Many high-performing models function as "black boxes," creating trust and adoption hurdles

Feedback loops: ML systems can create their own feedback loops that affect future performance in unexpected ways (for example, a recommender retrained on clicks that its own recommendations generated)

These characteristics demand a more specialized approach to product management, one that CRISP-ML helps provide.

CRISP-ML: Extending CRISP-DM for Machine Learning

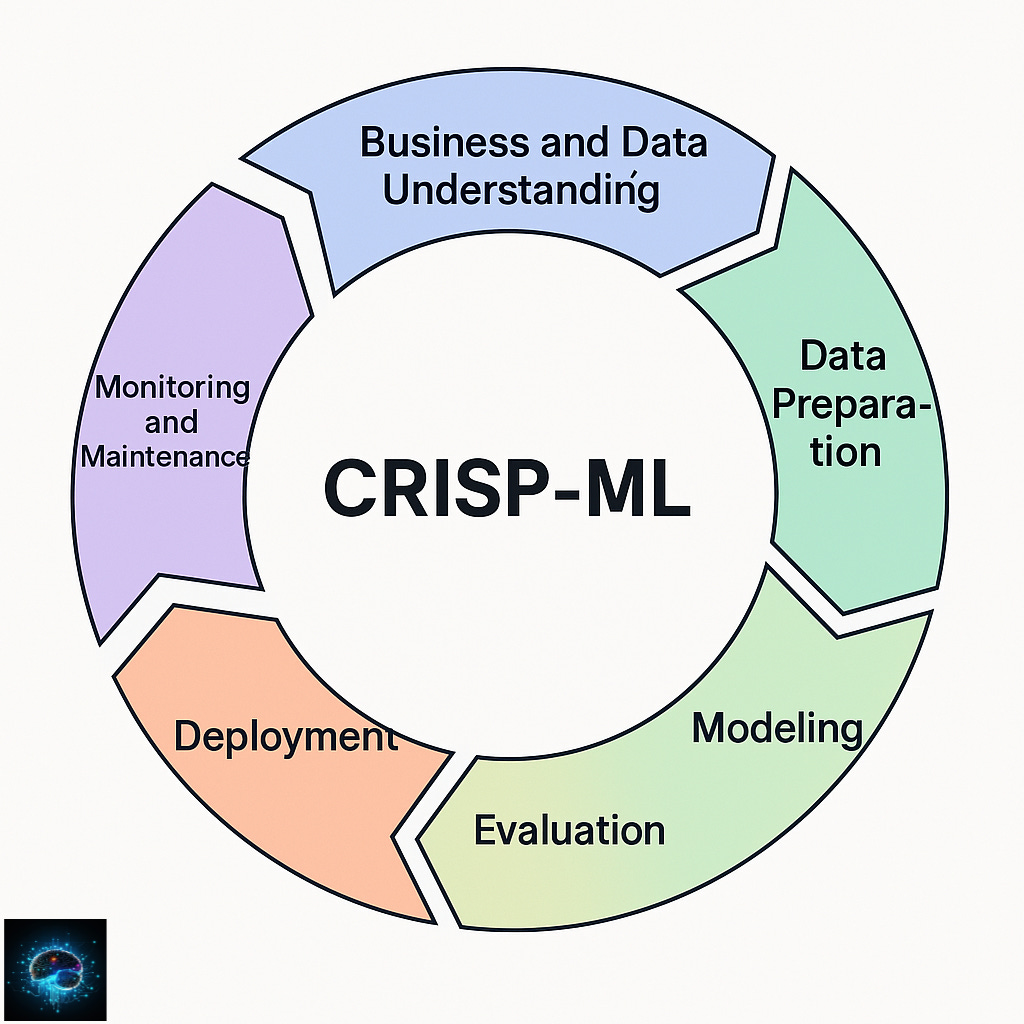

CRISP-ML (CRoss-Industry Standard Process for Machine Learning) builds upon the foundation of CRISP-DM while introducing several vital modifications directly relevant to product managers.

Key Differences in CRISP-ML

Combined Business and Data Understanding: CRISP-ML often merges these phases, acknowledging their interdependence in ML projects

Enhanced Quality Assurance: Quality checks are embedded throughout the lifecycle

Explicit Monitoring and Maintenance: A formal phase is added for post-deployment activities

Let's explore how these changes affect the product manager's role.

The PM's Guide to CRISP-ML Phases

1. Business and Data Understanding (Combined)

This combined phase recognizes that ML project viability often hinges on data availability from the outset.

The Product Manager's Role:

Simultaneous exploration: Guide parallel exploration of business needs and data realities

Technical feasibility assessment: Facilitate early assessment of whether available data can support business goals

Proof of concept planning: Define scope for initial proof of concept that validates both business value and technical feasibility

Data gap identification: Identify gaps between available data and ideal data, with plans to address critical gaps

Expectation calibration: Use data realities to set appropriate stakeholder expectations about capabilities

PM Deliverables:

Combined business-data assessment

Technical feasibility report with data dependency map

Proof of concept plan with specific validation criteria

Data acquisition roadmap (if needed)

This combined approach helps product managers avoid the common pitfall of developing business requirements that data realities cannot support.

2. Data Preparation with Quality Gates

CRISP-ML emphasizes quality assurance during data preparation to prevent propagating errors downstream.

The Product Manager's Role:

Quality gate definition: Establish clear quality criteria that must be met before proceeding

Resource prioritization: Allocate resources based on data quality impact on model performance

Test data strategy: Ensure proper separation of training, validation, and test data to prevent data leakage and to detect overfitting before launch

Documentation requirements: Define documentation standards for data transformations

Regulatory compliance: Ensure data preparation meets regulatory requirements (GDPR, CCPA, etc.)
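Of these responsibilities, the test data strategy is the easiest to make concrete. Below is a minimal Python sketch of a reproducible train/validation/test split; the 70/15/15 proportions, the fixed seed, and the `split_dataset` name are illustrative assumptions, not something CRISP-ML prescribes.

```python
import random

def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then cut into disjoint train/validation/test slices."""
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must be between 0 and 1")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]   # used for model selection and tuning
    test = shuffled[n_train + n_val:]         # held out until the final evaluation
    return train, val, test

train, val, test = split_dataset(range(100))
print(len(train), len(val), len(test))  # 70 15 15
```

A random split like this assumes records are independent. For time-ordered data, or when one user contributes many rows, splitting by time or by user is usually safer, because a random split can leak information from the test set into training.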

PM Deliverables:

Data quality gate checklist

Data preparation resource allocation plan

Test data strategy document

Documentation standards for data transformations

Compliance verification checklist

Quality gates give product managers clear checkpoints to assess progress and make go/no-go decisions before investing significant modeling resources.
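A quality gate can be as simple as a function that returns a go/no-go decision together with its reasons. The sketch below assumes records arrive as a list of dicts; the 5% missing-value limit, the 1,000-row minimum, and the `run_quality_gate` name are hypothetical values a team would set for its own data.

```python
from dataclasses import dataclass

@dataclass
class GateResult:
    passed: bool
    failures: list  # human-readable reasons, empty when the gate passes

def run_quality_gate(rows, required_fields, max_missing_rate=0.05, min_rows=1000):
    """Go/no-go check on a batch of records (a list of dicts) before modeling starts."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"only {len(rows)} rows (minimum {min_rows})")
    for name in required_fields:
        missing = sum(1 for r in rows if r.get(name) is None)
        rate = missing / len(rows) if rows else 1.0
        if rate > max_missing_rate:
            failures.append(f"{name}: {rate:.1%} missing (limit {max_missing_rate:.0%})")
    # Exact duplicate rows often point to a broken join or double ingestion.
    unique_rows = {tuple(sorted(r.items())) for r in rows}
    if len(unique_rows) < len(rows):
        failures.append(f"{len(rows) - len(unique_rows)} duplicate rows")
    return GateResult(passed=not failures, failures=failures)

# Illustrative batch: every tenth record is missing "age".
batch = [{"user_id": i, "age": None if i % 10 == 0 else 30} for i in range(1200)]
result = run_quality_gate(batch, ["user_id", "age"])
print(result.passed, result.failures)  # False ['age: 10.0% missing (limit 5%)']
```

Returning the reasons alongside the decision makes the go/no-go call easy to explain to stakeholders and to log for later audits.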

3. Modeling with Experiment Tracking

CRISP-ML places greater emphasis on systematic experimentation and documentation during modeling.

The Product Manager's Role:

Experiment framework: Establish a structured approach to experimentation with clear evaluation criteria

Version control requirements: Define standards for model and data versioning

Resource allocation: Balance exploration (trying different approaches) with exploitation (refining promising approaches)

Model selection criteria: Create a decision framework for model selection that balances multiple factors (performance, interpretability, computational requirements, etc.)

Documentation standards: Set expectations for model documentation to support future maintenance
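To make the versioning and documentation requirements concrete, here is a minimal sketch of an experiment record that ties each run to its parameters, metrics, and a hash of the exact training data. The field names, the `fingerprint_data` and `log_run` helpers, and the JSON Lines log file are assumptions for illustration; many teams would use a dedicated tracking tool such as MLflow instead.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass
class ExperimentRun:
    """One training run, recorded with enough context to reproduce and compare it."""
    model_name: str
    params: dict
    data_version: str               # ties the run to the exact data it was trained on
    metrics: dict = field(default_factory=dict)
    code_version: str = "unknown"   # e.g. a git commit SHA
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def fingerprint_data(rows):
    """Short content hash of the training data (a list of JSON-serializable records)."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]

def log_run(run, path="experiments.jsonl"):
    """Append the run as one JSON line so runs can be compared side by side later."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(run)) + "\n")

rows = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]
run = ExperimentRun(
    model_name="gbm-baseline",                      # hypothetical run name
    params={"max_depth": 4, "learning_rate": 0.1},
    data_version=fingerprint_data(rows),
    metrics={"val_auc": 0.81},                      # placeholder, not a real result
)
print(json.dumps(asdict(run), indent=2))
```

Hashing the data rather than recording a file name means a silently changed dataset shows up as a different `data_version`, which is exactly the kind of change that makes results hard to reproduce.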

PM Deliverables:

Experiment tracking framework

Version control and documentation requirements

Resource allocation plan for modeling phase

Model selection decision matrix

Model card template

Systematic experimentation helps product managers understand tradeoffs and make informed decisions about model selection.
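A model selection decision matrix often reduces to a weighted score. The sketch below assumes each criterion has already been scored from 0 to 1 with higher meaning better (so cost-type criteria are scored as "how acceptable", not as raw values) and that stakeholders have agreed on the weights; the criteria, weights, and scores shown are hypothetical.

```python
def score_models(candidates, weights):
    """Rank candidate models by a weighted average of 0-1 criterion scores (higher is better)."""
    total_weight = sum(weights.values())
    ranked = []
    for name, scores in candidates.items():
        weighted = sum(weights[c] * scores[c] for c in weights) / total_weight
        ranked.append((name, round(weighted, 3)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)

# Hypothetical weights and scores agreed with stakeholders.
weights = {"performance": 0.5, "interpretability": 0.3, "compute_cost": 0.2}
candidates = {
    "gradient_boosting": {"performance": 0.9, "interpretability": 0.5, "compute_cost": 0.6},
    "logistic_regression": {"performance": 0.75, "interpretability": 0.9, "compute_cost": 0.95},
}
print(score_models(candidates, weights))
# [('logistic_regression', 0.835), ('gradient_boosting', 0.72)]
```

With these weights the more interpretable model ranks first despite lower raw performance, which is the kind of tradeoff the matrix is meant to surface. The weights themselves are a product decision worth documenting alongside the result.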

4. Evaluation Beyond Accuracy

CRISP-ML expands evaluation to include robustness, fairness, and explainability alongside traditional accuracy metrics.

The Product Manager's Role:

Comprehensive evaluation framework: Define evaluation criteria beyond simple accuracy metrics

Fairness assessment: Ensure models are evaluated for bias across different user groups

Robustness testing: Validate model performance under various conditions and edge cases

Explainability requirements: Define the necessary level of model explainability based on use case

User acceptance criteria: Establish user-centric evaluation measures
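For the fairness assessment, a common first step is to break a metric down by user group and look at the gap between groups. This sketch uses accuracy with toy labels and hypothetical groups "A" and "B". Accuracy parity is only one of several fairness definitions, and choosing the right one for a use case is itself a product decision; libraries such as Fairlearn offer similar per-group breakdowns.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy per user group, plus the gap between the best- and worst-served groups."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    per_group = {g: correct[g] / total[g] for g in total}
    gap = max(per_group.values()) - min(per_group.values())
    return per_group, gap

# Toy labels and predictions for two hypothetical user groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
per_group, gap = accuracy_by_group(y_true, y_pred, groups)
print(per_group, gap)  # {'A': 0.75, 'B': 0.5} 0.25
```

A team would typically agree up front on the largest acceptable gap, so the evaluation produces a clear pass or fail rather than a debate after the fact.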

PM Deliverables:

Multi-dimensional evaluation framework

Fairness and bias assessment protocol

Robustness test scenarios

Explainability requirements document

User acceptance testing plan

This broader evaluation helps product managers ensure that technical performance translates to product success and user satisfaction.
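Robustness testing can start with a simple question: does the prediction change when the input changes slightly? The sketch below measures the share of inputs whose prediction never changes under small random noise. The ±5% multiplicative noise, the `prediction_stability` name, and the `toy_model` stand-in are assumptions; realistic robustness scenarios (missing fields, typos, out-of-range values) should come from domain experts.

```python
import random

def prediction_stability(predict, inputs, noise=0.05, trials=20, seed=0):
    """Share of inputs whose prediction never changes under small random perturbations."""
    rng = random.Random(seed)
    stable = 0
    for x in inputs:
        baseline = predict(x)
        # Scale each feature by a random factor in [1 - noise, 1 + noise].
        perturbed_preds = (
            predict([v * (1 + rng.uniform(-noise, noise)) for v in x])
            for _ in range(trials)
        )
        if all(p == baseline for p in perturbed_preds):
            stable += 1
    return stable / len(inputs)

# Hypothetical stand-in model: flags an input when its feature sum exceeds 10.
def toy_model(x):
    return int(sum(x) > 10)

inputs = [[1.0, 2.0], [5.0, 5.1], [20.0, 3.0]]  # the middle input sits near the decision boundary
print(prediction_stability(toy_model, inputs))
```

Inputs near a decision boundary, like the middle example, are the most likely to flip; a low stability score on such cases points to where a fallback or human review may be needed.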

5. Deployment with Integration Testing

CRISP-ML emphasizes thorough integration testing before deployment.

The Product Manager's Role:

Integration test planning: Ensure comprehensive testing of the ML system within the broader product environment

Performance benchmark establishment: Set the benchmarks (for example, accuracy and latency) the system must meet in production

Contingency planning: Create fallback plans for potential deployment issues

Staged rollout strategy: Define a phased approach to minimize risk

User communication: Plan communication to users about new ML capabilities
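A staged rollout needs a stable way to decide which users see the new model. One common approach, sketched here under assumptions, hashes each user ID into a fixed bucket so that a user admitted at 5% stays admitted at 25%. The salt string, the stage percentages, and the `in_rollout` name are hypothetical.

```python
import hashlib

def in_rollout(user_id: str, percent: float, salt: str = "model-v2") -> bool:
    """True if this user should see the new model at the given rollout percentage (0-100)."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000   # stable bucket in 0..9999 for this user and salt
    return bucket < percent * 100           # e.g. percent=5 admits buckets 0..499

# A hypothetical stage plan: widen exposure only after each stage's checks pass.
stages = [1, 5, 25, 50, 100]
users = [f"user-{i}" for i in range(10_000)]
for pct in stages:
    share = sum(in_rollout(u, pct) for u in users) / len(users)
    print(f"{pct:>3}% stage -> {share:.1%} of sample users on the new model")
```

Because assignment depends only on the user ID and salt, no assignment table is needed, and a user's experience does not flip back and forth between models as the rollout widens. Changing the salt reshuffles users for the next model version.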

PM Deliverables:

Integration test plan

Performance benchmark document

Deployment contingency plans

Staged rollout strategy

User communication materials

Thorough deployment planning helps product managers minimize the risks associated with introducing ML capabilities.
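Contingency planning often translates into a fallback path in the serving code. The sketch below serves the model's answer only when the call succeeds and its confidence clears a threshold, and otherwise routes to a simple rule. The 0.6 threshold, the `(label, confidence)` return shape, and all the stand-in functions are assumptions for illustration.

```python
import logging

logger = logging.getLogger("ml_fallback")  # hypothetical logger name

def predict_with_fallback(model_predict, fallback_predict, features, min_confidence=0.6):
    """Serve the model's answer when it is available and confident; otherwise use a simple rule."""
    try:
        label, confidence = model_predict(features)
    except Exception:
        # Model service down, malformed input, or a timeout raised as an error.
        logger.exception("model call failed; serving fallback")
        return fallback_predict(features), "fallback"
    if confidence < min_confidence:
        return fallback_predict(features), "fallback"
    return label, "model"

# Hypothetical stand-ins for a real model client and a rule-based fallback.
def confident_model(features):
    return "approve", 0.92

def unsure_model(features):
    return "approve", 0.41

def broken_model(features):
    raise TimeoutError("model endpoint did not respond")

def manual_review_rule(features):
    return "manual_review"

for model in (confident_model, unsure_model, broken_model):
    print(model.__name__, predict_with_fallback(model, manual_review_rule, {"amount": 120}))
```

Returning the source ("model" or "fallback") with each answer lets the monitoring dashboard track how often the fallback fires, which is itself an early warning signal.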

6. Monitoring and Maintenance (New Phase)

This additional phase in CRISP-ML acknowledges the ongoing attention ML products require after deployment.

The Product Manager's Role:

Monitoring dashboard requirements: Define what needs to be monitored and how

Performance threshold establishment: Set the performance thresholds that trigger model retraining

Feedback collection mechanism: Create systems to collect user feedback on model outputs

Update planning: Establish cadence and criteria for model updates

Continuous improvement process: Define how monitoring insights feed back into the development cycle
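Monitoring for the performance decay described earlier often starts with input drift. A widely used measure is the Population Stability Index (PSI): bin a feature, then sum (a - e) * ln(a / e) over the bins, where e and a are the baseline and live shares in each bin. This plain-Python sketch uses equal-width bins over the baseline range, a simplification (quantile bins are also common); the function name and the small `eps` floor are assumptions.

```python
import math

def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample (e.g. training data) and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range live values into edge bins
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

baseline = [i / 100 for i in range(1000)]   # values spread over 0..9.99
shifted = [v + 3 for v in baseline]         # same shape, shifted upward
print(population_stability_index(baseline, baseline))        # 0.0
print(population_stability_index(baseline, shifted) > 0.25)  # True
```

A commonly cited rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift. These cutoffs are conventions rather than guarantees and are worth validating against your own model's history.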

PM Deliverables:

Monitoring dashboard requirements

Retraining threshold document

Feedback collection system specification

Model update process documentation

Continuous improvement framework

This phase transforms ML products from one-time deliveries to continuously evolving systems.
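Retraining thresholds are easier to agree on when they are written down as an explicit rule. The hypothetical policy below triggers retraining when a live metric drops more than 5% relative to its launch baseline, or when any feature's drift score (such as PSI) exceeds 0.25. Both thresholds and the `RetrainPolicy` name are assumptions, and the metric is assumed to be one where higher is better.

```python
from dataclasses import dataclass

@dataclass
class RetrainPolicy:
    baseline_metric: float          # metric measured at launch, e.g. validation precision
    max_relative_drop: float = 0.05
    max_psi: float = 0.25

def should_retrain(policy, live_metric, feature_psi):
    """Return (decision, reasons) so the retraining call is explainable to stakeholders."""
    reasons = []
    drop = (policy.baseline_metric - live_metric) / policy.baseline_metric
    if drop > policy.max_relative_drop:
        reasons.append(f"metric down {drop:.1%} vs. baseline")
    drifted = [name for name, psi in feature_psi.items() if psi > policy.max_psi]
    if drifted:
        reasons.append("drift in: " + ", ".join(sorted(drifted)))
    return bool(reasons), reasons

policy = RetrainPolicy(baseline_metric=0.80)
print(should_retrain(policy, live_metric=0.74, feature_psi={"age": 0.05, "income": 0.31}))
```

Keeping the policy as data rather than burying the numbers in code makes it easy to review in the retraining threshold document and to adjust as the team learns how the model actually decays.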

CRISP-ML(Q): Embedding Quality Throughout

A further refinement, CRISP-ML(Q), places even greater emphasis on quality assurance at every stage of the process. For product managers, this means:

Defining quality dimensions: Establishing what "quality" means across multiple dimensions (performance, reliability, fairness, etc.)

Creating verification points: Embedding quality checks throughout the development process

Allocating quality resources: Ensuring sufficient resources for quality assurance activities

Balancing quality and speed: Making informed decisions about quality/speed tradeoffs

Implementing CRISP-ML: The Product Manager's Perspective

As you integrate CRISP-ML into your product development process, consider these practical implementation tips:

1. Adapt to Your Product Development Methodology

CRISP-ML can be adapted to work with various product development methodologies:

In Agile environments: Incorporate ML-specific ceremonies and artifacts while maintaining sprint cadences

In Waterfall processes: Define clear stage gates that incorporate ML-specific quality checks

In Dual-track Agile: Separate discovery (exploring ML approaches) from delivery (implementing selected models)

2. Educate Stakeholders on ML Realities

Use CRISP-ML as a framework to educate stakeholders about ML product realities:

The iterative nature of development

The critical importance of data quality

The ongoing maintenance requirements

The probabilistic nature of outcomes

3. Build Cross-functional Collaboration

CRISP-ML emphasizes collaboration between:

Data scientists and product managers

Data engineers and DevOps teams

Subject matter experts and model developers

Business stakeholders and technical teams

As product managers, our role is to facilitate this collaboration and ensure all perspectives are considered.

4. Plan for Product Lifecycle Management

CRISP-ML helps you think beyond initial development to the entire product lifecycle:

Initial development and deployment

Ongoing monitoring and maintenance

Periodic retraining and updates

Eventual sunsetting or replacement of models

The Business Impact of CRISP-ML

Adopting CRISP-ML isn't just about better processes; it directly affects business outcomes:

Reduced risk of failure: Better quality assurance minimizes the risk of deploying underperforming models

Faster time to value: A clearer process makes development and deployment smoother

Lower maintenance costs: Structured monitoring and maintenance prevent costly emergency fixes

Higher user satisfaction: More robust models with better evaluation lead to better user experiences

Responsible AI development: Quality focus helps avoid bias and fairness issues

CRISP-ML provides a valuable framework for managing the unique challenges of machine learning products. By incorporating quality assurance throughout the development process and formalizing monitoring and maintenance activities, it addresses many of the limitations of traditional CRISP-DM for modern machine learning applications.

As product managers, our role is to adapt this framework to our specific organizational context and product needs, using it to bridge the gap between technical implementation and business value.

In my next post, I'll explore CRISP-GEN AI, an emerging adaptation designed specifically for generative AI products, such as those built on large language models. I'll examine the unique product management challenges of these technologies and how to address them effectively.

This is the third post in my series on "Enhancing CRISP-DM for Modern AI Product Management."